Identifying Malware with Machine Learning

View on GitHub

There's a nice paper titled Behavioral Malware Detection Using Deep Graph Convolutional Neural Networks about using machine learning to classify pieces of software as goodware or malware based on a sequence of API calls. This project is my attempt at performing the same classification task as the paper using the same dataset.

The Machine Learning model that I chose to use was a Decision Tree classifier, as I thought this would work well with the dataset's large number of categorical features. This is, of course, much simpler than the model trained in the paper, but part of me was curious to see how well I could do with a much simpler model.

The first thing to note about this dataset is that it contains about 42,797 malware samples and only 1,079 goodware samples. This is a pretty big problem: training a model to classify every sample as malware regardless of its sequence of API calls would give you about 97.5% accuracy on this dataset. I dealt with this by undersampling the dataset to get an equal number of malware samples and goodware samples.

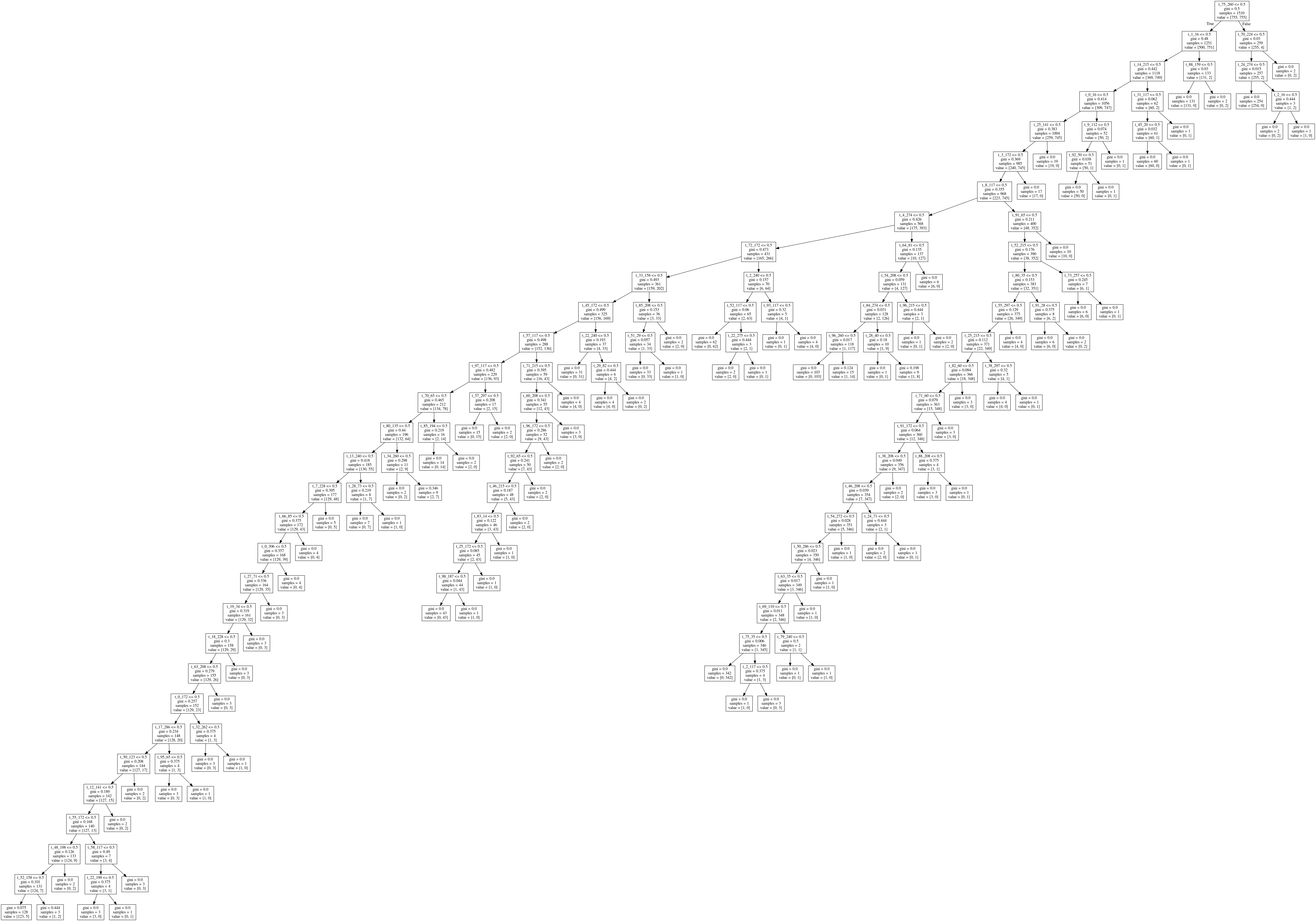

Next, I tuned my decision tree's hyperparameters using a similar method to the paper. I removed 30% of the undersampled data to be test data, leaving me 70% for training. I then used a grid search with 5-fold cross-validation with my training data to choose the hyperparameters. Here is a visualization of the resulting decision tree:

Once I found this tree as the best classifier, I tested it against my test data. Here are the results:

| My Model | Paper's Model | |

|---|---|---|

| AUC-ROC | 0.8657 | 0.9729 |

| F1-Score | 0.8664 | 0.9201 |

| Precision | 0.8624 | 0.9216 |

| Recall | 0.8704 | 0.9186 |

| Accuracy | 0.8657 | 0.9244 |

I'm very happy with this, especially since the paper states that tuning their models' hyperparameters took 60 hours, while my classifier can be tuned in minutes.